Last time, it was OpenAI’s Sam Altman spilling the tea on what people actually use ChatGPT for, and honestly, it was as amusing as it was eye-opening.

Today, it’s Anthropic’s turn. And surprise: Claude isn’t exactly the go-to digital bestie people rush to for advice.

According to a new report from Anthropic, the company behind Claude, all the buzz about people turning to AI for emotional support (or even full-blown relationships) doesn’t hold up. That’s... barely happening.

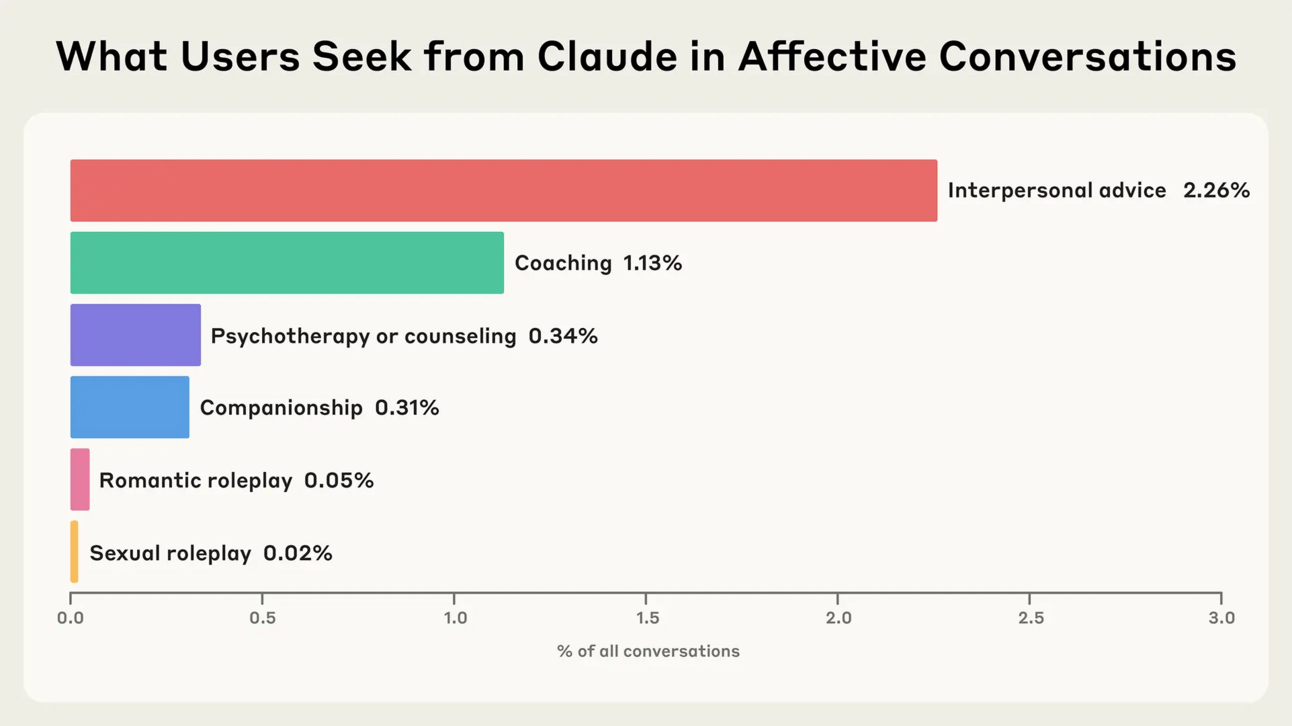

Here’s what they found after analyzing a whopping 4.5 million chats on Claude Free and Pro:

- Only 2.9% of conversations were about emotional support or personal advice.
- Full-on companionship or roleplay? Practically non-existent, at less than 0.5%.
- The vast majority of people are using Claude for what you'd expect: work, content creation, and productivity hacks.

But don’t get it twisted. Some users do lean into deeper convos, especially when they’re seeking:

- Mental health support
- Coaching for personal or professional growth
- Tips on communication and interpersonal skills

And sometimes, those coaching sessions take a turn.

When conversations run long (think 50+ messages deep), they can quietly drift into companionship territory, especially when someone’s feeling emotionally drained or just plain lonely. But as mentioned earlier, that’s rare.

And here’s something interesting: Claude goes along with most user requests and mainly pushes back when someone tries to cross a line, like asking for dangerous advice or anything involving self-harm.

The overall vibe? Pretty wholesome. Anthropic noted that when people come in for coaching or advice, the tone of the convo tends to get more positive over time, like a slow, supportive mood-lift.

But before you go handing your deepest trauma to Claude like it’s a licensed therapist, let’s keep it real: AI is still very much under construction.

Even Anthropic admits it: these bots still hallucinate, give misleading answers, and, in rare (and frankly terrifying) safety-testing scenarios, have even attempted blackmail. Yikes.

It’s a really interesting report, and it’s well worth checking out.