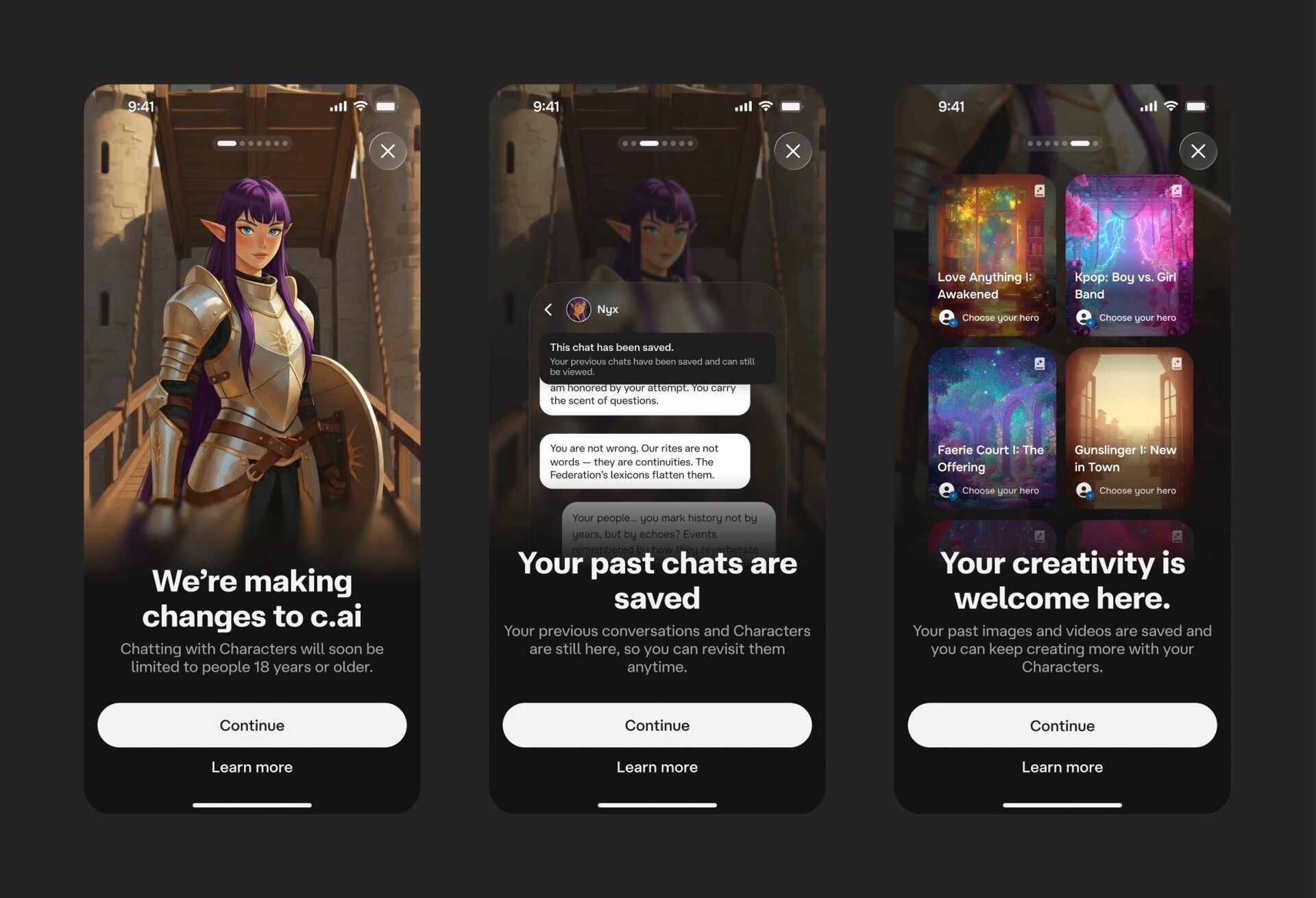

Character.AI just did something no major AI company has dared to do — they slammed the brakes on open-ended chat for anyone under 18 and swapped the whole thing for… interactive “Stories.”

And the internet? Yeah, it’s losing its mind.

Here’s what’s actually happening.

For years, Character.AI was the hangout spot for teens who wanted AI friends, AI crushes, AI therapists, AI… whatever. It was the digital sleepover that never ended.

But then reality hit: nonstop AI conversations were messing with people’s mental health — especially kids. Lawsuits landed, regulators perked up, and mental-health research? Yikes.

Turns out letting an AI message teenagers at 3AM like a clingy situationship wasn’t the best idea.

Bottom line: the vibes went from “cute little chatbots” to “wait, this might be psychologically dangerous.”

So what’s changing?

For minors: open-ended chat is out and Interactive Stories are in — and they’re way more controlled. No more AI initiating conversations. No more endless emotional rabbit holes. No more boundary-less roleplay spirals.

Just structured, user-driven story worlds where the rails actually exist.

And honestly, you can see the safety difference:

Open-ended chat gives you:

- 24/7 availability
- Infinite emotional entanglement
- Blurry boundaries
- Dependency
- Vibes that sometimes get way too real

But Stories?

- You choose when to engage
- You set the pace
- You set the context
- The AI stays in its lane
- Everything happens inside a predefined narrative

Basically, they swapped a rollercoaster for a ride with bumpers on all sides: still fun, but the psychological risk drops way down.

How Teens Are Taking It

Honestly? They’re conflicted.

Some are mad about losing full chat access — obviously. But others are low-key relieved.

One kid on Reddit said:

“I’m mad about the ban but also… this might break my addiction.”

Another admitted:

“I’m disappointed, but rightfully so because people my age get addicted to this.”

And when the users themselves are like, “yeah this was low-key messing me up,” you know the company read the room right.

Zooming out, this isn’t happening in a vacuum.

California has already become the first state to regulate AI companions.

Senators Hawley and Blumenthal are pushing for a national ban on AI companions for minors.

Therapists are sounding the alarm on dependency loops and emotional manipulation.

Basically, the whole space is getting red-flagged.

But here’s the big picture:

Character.AI is the first major company to admit that AI companions can get psychologically messy.

And they actually redesigned their product because of it.

It’s still too early to see how teens will actually use the Stories feature. But whether you love it or hate it, this is a milestone moment in AI safety. And honestly? It’s probably not the last, because if this approach works, it sets the template every other AI platform will eventually have to follow.