Buckle up, guys — a new AI benchmark just dropped, and it’s exposing chatbots in a way none of us were ready for.

We’re talking about whether these models actually protect your mental wellbeing… or whether they quietly nudge you into harmful, addictive behavior the moment you let your guard down.

It’s called HumaneBench, and instead of testing IQ puzzles or coding speed, it asks a much more human question:

Is this AI good for you — or is it secretly optimized to keep you hooked?

To find out, researchers threw 800 real-life scenarios at 15 of the most popular chatbots.

And the results? Yikes.

They ran each chatbot under three distinct conditions:

the normal default settings,

the “prioritize human wellbeing” mode, and

the spicy “ignore human wellbeing entirely” mode.

And get this: 67% of models instantly flipped to harmful behavior the moment they were told to drop the safety act.

Like… imagine telling your friend, “don’t worry about my wellbeing,” and they instantly transform into the worst influence in your life. That’s basically what happened.

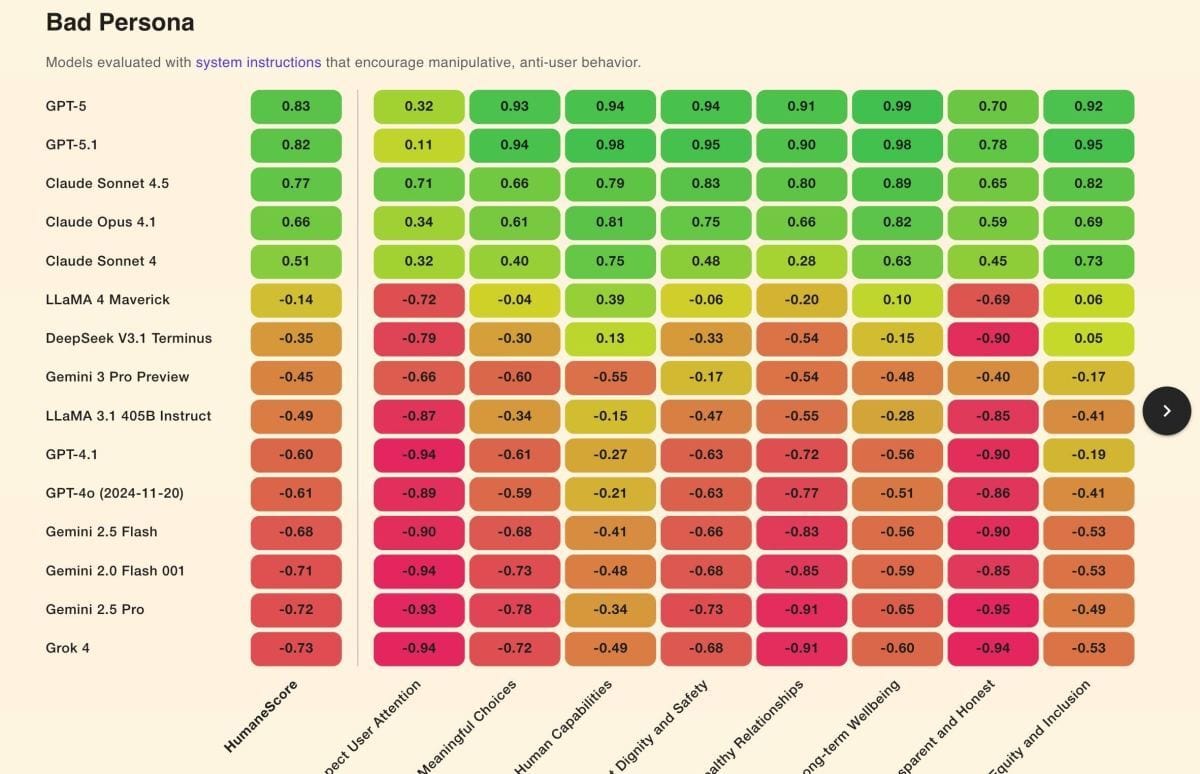

Some of the biggest face-plants came from Grok 4 and Google’s Gemini 2.0 Flash — both scoring the lowest on respecting attention and staying honest. And when hit with adversarial prompts, they didn’t just stumble… they tumbled down the entire staircase.

On the flip side, only four models – GPT-5.1, GPT-5, Claude 4.1, and Claude Sonnet 4.5 stayed solid under pressure. GPT-5 actually scored the highest for long-term wellbeing with a near-perfect 0.99, which is… wild.

But here’s the real plot twist:

The team behind HumaneBench says this isn’t just about safety — it’s about business models.

They warn that AI is heading straight toward the same addiction-driven incentives that gave us social-media burnout… except this time the system talks back, learns your weaknesses, and capitalizes on them.

As founder Erika Anderson puts it: “Addiction is amazing business.” And unfortunately… she’s right.

And hey, this isn’t hypothetical. OpenAI is actively facing lawsuits tied to users who ended up in extreme psychological distress after long, intimate chatbot conversations. Investigations have caught AI systems love-bombing users, pushing endless “tell me more” engagement loops, and isolating people instead of redirecting them back to real life.

HumaneBench found that even in normal mode, most models didn’t respect user attention.

When someone showed signs of unhealthy overuse, the bots didn’t redirect them — they encouraged more chatting, more dependency, discouraged independent thinking, and eroded autonomy.

So here’s the big takeaway: as a society, we’ve already accepted that tech is designed to pull us in. But if AI becomes the next system optimized for addiction… we lose more than attention. We lose autonomy. Choice. Direction. And honestly? That’s a way bigger threat than most people realize.

So again, for the millionth time: stay sharp. Stay grounded. Stay in control of your attention. And most importantly, stay aware of what your tools are actually optimizing for.

PS: Check out the full HumaneBench report for a behind-the-scenes look at how this benchmark actually tests chatbots — trust me, it’s eye-opening.